If you’re searching for an Ollama desktop app, Ollama GUI, Ollama client, or a fast Ollama chat interface for running local AI models on macOS, Windows, or Linux, this guide introduces Askimo App as an option worth considering. Askimo offers a native Ollama desktop experience for local models including Llama 3, Llama 3.1, Llama 3.2, Mistral, Phi-3, Gemma, and hundreds of other Ollama models, while also supporting cloud providers like OpenAI, Claude, and Gemini in a unified interface.

TL;DR: Install Ollama, download the Askimo App GUI, configure Askimo to connect to http://localhost:11434, select your preferred Ollama model (llama3, mistral, phi3, gemma), and start chatting with fully searchable, organizable, and exportable local AI conversations.

Why Use an Ollama Desktop GUI Instead of CLI or Web UI?

While Ollama’s command-line interface (CLI) is powerful for quick prompts, a dedicated Ollama desktop app like Askimo adds essential productivity features for serious AI workflows:

- Persistent conversation history across all your Ollama chat sessions

- In-chat full-text search to find messages within your Ollama conversations

- Star and pin important Ollama conversations for instant access

- Export Ollama chats to Markdown, JSON, or HTML for documentation, notes, or team sharing

- One-click provider switching between local AI providers and cloud AI providers

- Project-aware RAG for context-aware conversations with your projects using local Ollama models

- Custom themes, keyboard shortcuts, and structured workflows for Ollama

- Lazy loading for massive chats (Askimo only loads older Ollama messages when you scroll up)

Askimo transforms local Ollama model experimentation from scattered terminal commands into a repeatable, professional desktop workflow.

Why Askimo’s Ollama Desktop Client Outperforms Web UIs

Many “Ollama desktop” apps and Ollama web UIs render the entire conversation into the DOM. As your Ollama chats grow into hundreds or thousands of messages with local models like Llama 3 or Mistral, memory usage climbs and the GUI begins to lag: scrolling stutters, input is delayed, and rendering slows down.

Askimo’s Ollama desktop client takes a different approach. It’s built with a native-first, resource-aware design optimized for Ollama workflows: messages stream in as you chat with your local models, while older history stays virtualized and is loaded only when you scroll up. This keeps memory usage low and performance smooth, even during long research sessions or large coding conversations with Llama 3.2, Mistral, or Phi-3.
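To make the idea of virtualized history concrete, here is a minimal sketch of loading older messages page by page. It is an illustration only, not Askimo's implementation: the `LazyHistory` class, the `page_size` value, and the in-memory `store` list (standing in for whatever storage the app uses) are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class LazyHistory:
    """Keeps only a window of the newest messages loaded (hypothetical sketch)."""

    store: list                    # stands in for a persistent message store
    page_size: int = 50            # assumed page size, not an Askimo setting
    loaded: list = field(default_factory=list)

    def __post_init__(self) -> None:
        # Start with just the newest page of messages.
        self.loaded = self.store[-self.page_size:]

    def load_older(self) -> int:
        """Prepend the next older page; return how many messages were added."""
        remaining = len(self.store) - len(self.loaded)
        count = min(self.page_size, remaining)
        if count > 0:
            start = remaining - count
            self.loaded = self.store[start:remaining] + self.loaded
        return count


history = LazyHistory(store=[f"message {i}" for i in range(1000)], page_size=50)
print(len(history.loaded))  # 50
history.load_older()        # simulates the user scrolling up once
print(len(history.loaded))  # 100
```

Only the loaded window needs to be rendered, so the cost of a long chat depends on how far the user scrolls back rather than on the total message count.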

Askimo Ollama Desktop vs Terminal CLI vs Web UI Comparison

| Workflow Feature | Ollama Terminal Only | Generic Ollama Web UI | Askimo Ollama Desktop |
|---|---|---|---|
| Multi-provider support | Manual scripts | Usually Ollama-only | Built-in provider switcher |
| Chat history | No automatic logs | Basic/varies | Organized & searchable |
| Export options | Manual copy | Rare | Markdown, JSON & HTML export |
| Star / organize chats | Not available | Limited | Favorites + structured sessions |
| Local privacy | Fully local | Depends on tool | Local AI + optional cloud |
| Cross-platform | Linux/macOS/Win | Varies widely | Linux/macOS/Win |

Step 1: Install Ollama on macOS, Windows, or Linux

Ollama runs locally on macOS, Windows and Linux.

- macOS

Download the installer: https://ollama.com/download/mac

- Windows

Download the installer: https://ollama.com/download/windows

- Linux

curl -fsSL https://ollama.com/install.sh | sh

Test your install:

ollama run llama3

If a model isn’t downloaded yet, Ollama will fetch it automatically.

Step 2: Install Askimo App (Ollama GUI)

Askimo App binaries: https://askimo.chat/download/

Open the app (Applications folder / Start Menu) and proceed to provider setup.

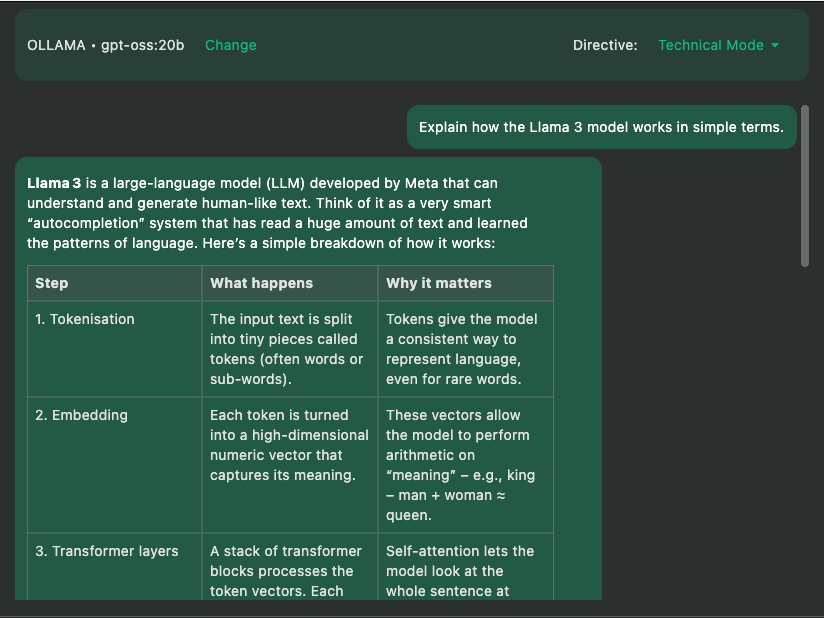

Step 3: Connect Askimo App to Your Ollama Server

Askimo auto-detects the default Ollama endpoint:

http://localhost:11434

If you changed the port or enabled remote access, update the endpoint manually.
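If you want to confirm the endpoint outside the app, the sketch below queries Ollama's `GET /api/tags` route, which lists locally installed models. The function names `model_names` and `list_local_models` are made up for this example, and nothing here reflects how Askimo performs its own detection.

```python
import json
import urllib.error
import urllib.request


def model_names(tags_payload: dict) -> list:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in tags_payload.get("models", [])]


def list_local_models(base_url: str = "http://localhost:11434", timeout: float = 3.0):
    """Return installed model names, or None if the endpoint is unreachable."""
    url = base_url.rstrip("/") + "/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return model_names(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a body that is not valid JSON.
        return None


if __name__ == "__main__":
    names = list_local_models()
    if names is None:
        print("Ollama endpoint not reachable")
    else:
        print("Installed models:", ", ".join(names) or "(none)")
```

If you run Ollama on a different host or port, pass that base URL as the first argument.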

- Open Askimo App

- Go to Settings → Providers

- Select Ollama

- Ensure Endpoint = http://localhost:11434

- Choose a model (e.g. llama3, mistral, phi3, gemma, gpt-oss:20b, etc.)

- Save & start chatting

Switch Ollama models instantly with no terminal commands required.

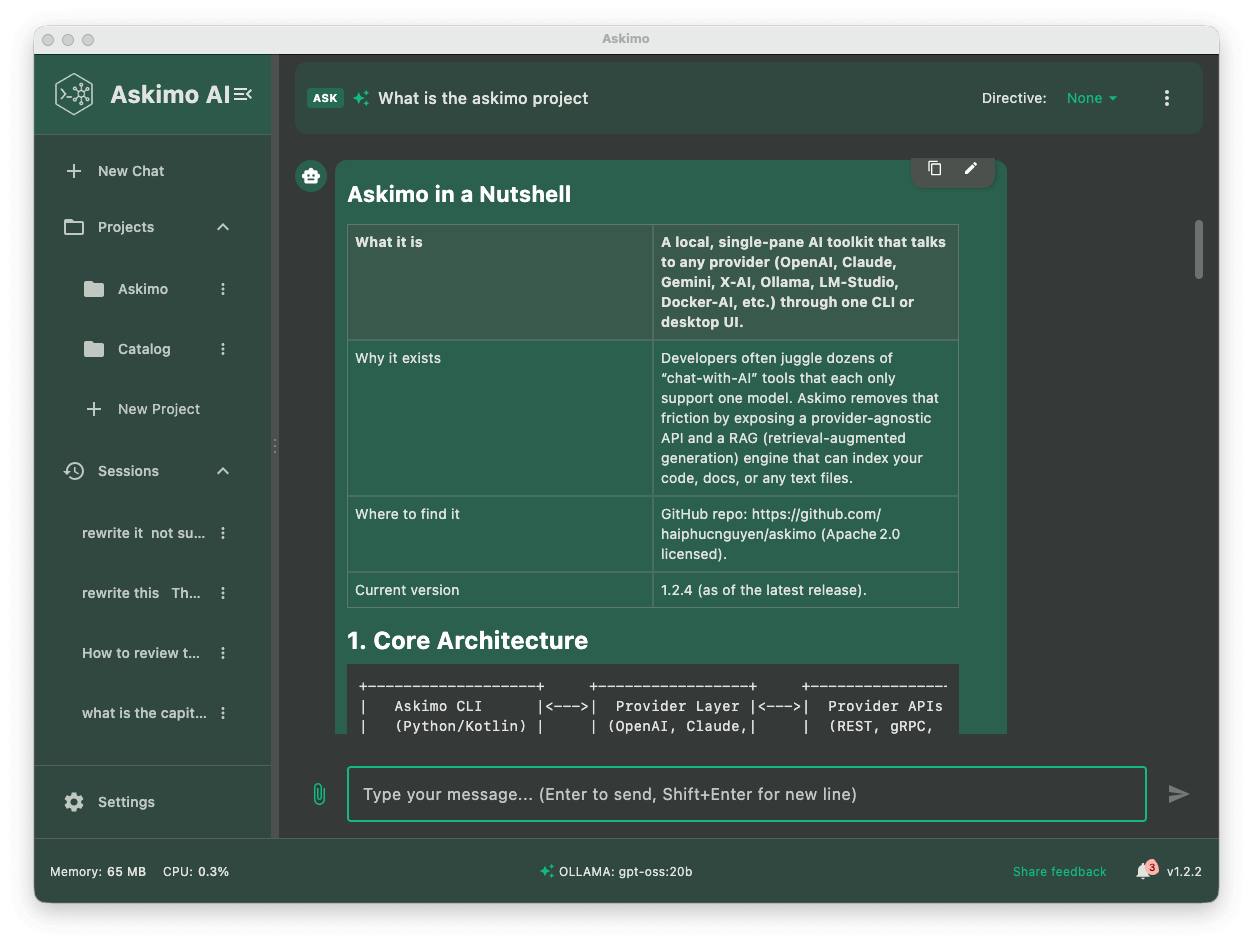

Askimo Ollama Desktop App Feature Deep Dive

Below is a deeper look at what makes Askimo more than “just another Ollama wrapper”.

1. Performance & Resource Efficiency for Ollama Chat

- Lazy loading of older Ollama messages (virtualized history for massive chats)

- Streaming Ollama responses with smooth incremental rendering

- Minimal DOM footprint vs. Ollama web wrappers that re-render entire threads

- Efficient memory usage for Ollama research sessions that span hundreds of turns
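As a rough illustration of incremental rendering, the sketch below consumes the line-delimited JSON that Ollama's `/api/chat` route emits when streaming and yields each text fragment as it arrives. The helper name `iter_fragments` and the sample lines are assumptions made for this example; Askimo's actual rendering code is not shown in this guide.

```python
import json


def iter_fragments(lines):
    """Yield assistant text fragments from streamed /api/chat JSON lines.

    Each line is one JSON object; the last one carries "done": true.
    Accepts str or bytes lines.
    """
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        event = json.loads(line)
        content = event.get("message", {}).get("content", "")
        if content:
            yield content
        if event.get("done"):
            break


# Sample lines shaped like a streamed chat response (made up for the demo).
sample = [
    '{"message": {"role": "assistant", "content": "Hello"}, "done": false}',
    '{"message": {"role": "assistant", "content": ", world"}, "done": false}',
    '{"message": {"role": "assistant", "content": ""}, "done": true}',
]

reply = ""
for fragment in iter_fragments(sample):
    reply += fragment  # a UI would append only this fragment to the view
print(reply)  # Hello, world
```

Appending fragments instead of re-rendering the whole thread is what keeps the per-token cost constant as the conversation grows.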

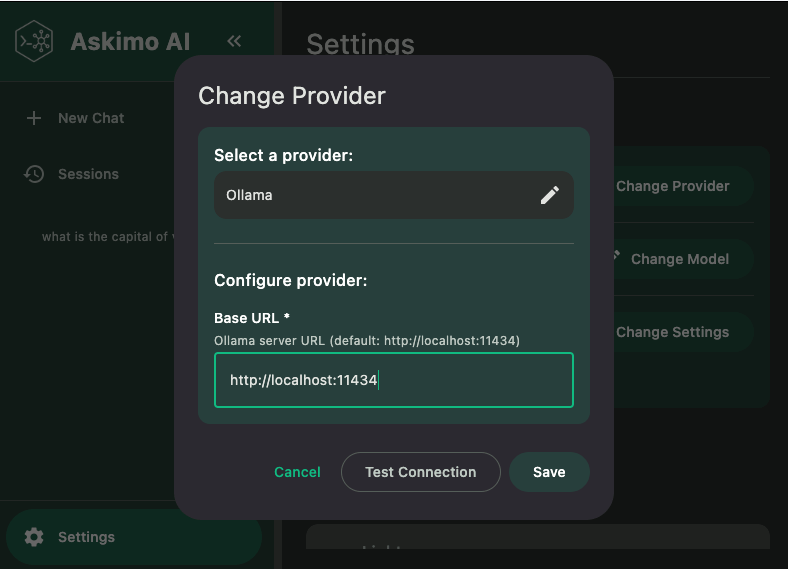

2. Multiple AI Models & Ollama Model Management

- Instantly switch between local AI providers (Ollama and others) and cloud providers (OpenAI, Claude, Gemini)

- Quick model selector (e.g. swap from llama3 → mistral for speed)

- Automatic endpoint detection for local Ollama

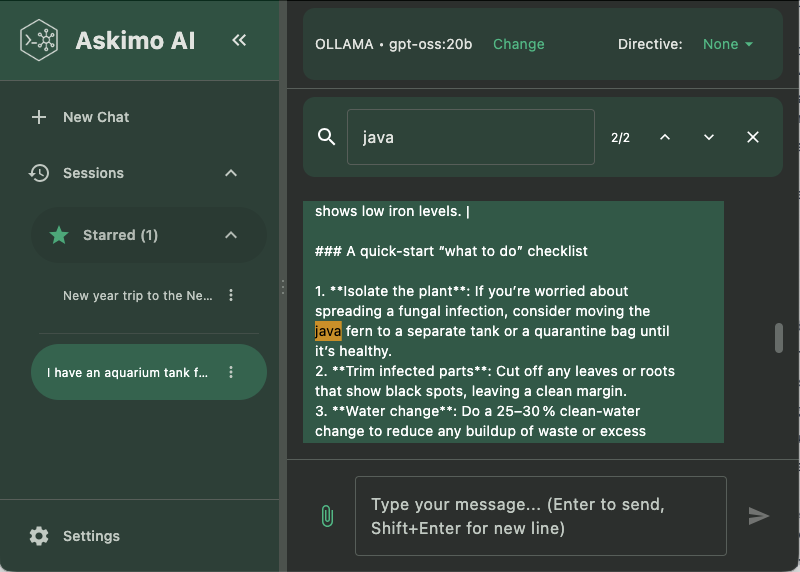

3. Search & Knowledge Organization for Ollama Conversations

- In-chat full-text search to find any message within your Ollama conversation sessions

- Fast keyword filtering to quickly locate specific information in long chats

- Star / pin important Ollama threads for fast recall and easy access
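The search and filtering behavior described above can be pictured with a small sketch. It assumes a simple case-insensitive match on every query term; the `Message` type and `search_messages` function are hypothetical, and Askimo's real search may use an index or different matching rules.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str
    text: str


def search_messages(messages, query):
    """Return (index, message) pairs whose text contains every query term."""
    terms = query.lower().split()
    if not terms:
        return []
    return [
        (i, m)
        for i, m in enumerate(messages)
        if all(term in m.text.lower() for term in terms)
    ]


chat = [
    Message("user", "How do I pull the Mistral model?"),
    Message("assistant", "Run ollama pull mistral in a terminal."),
    Message("user", "And how do I export this chat?"),
]

for index, message in search_messages(chat, "mistral pull"):
    print(index, message.role, message.text)
```

Returning the message index alongside the match lets a UI jump straight to that position in the thread.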

4. Chat Thread Utilities for Ollama Sessions

- One-click export to Markdown, JSON, or HTML (clean, dev-friendly formatting)

- Shareable Ollama transcripts for docs / PRDs / specs

- Star, unstar, and reorder important Ollama sessions
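For a sense of what an exported transcript might look like, here is a minimal sketch that renders the same conversation as Markdown and as JSON. The layout and field names (`title`, `messages`, `role`, `content`) are assumptions for illustration; the guide does not document Askimo's actual export schema.

```python
import json


def to_markdown(title, messages):
    """Render a chat as Markdown with one bold role label per message."""
    lines = [f"# {title}", ""]
    for m in messages:
        lines += [f"**{m['role']}**", "", m["content"], ""]
    return "\n".join(lines).rstrip() + "\n"


def to_json(title, messages):
    """Render a chat as pretty-printed JSON (hypothetical schema)."""
    return json.dumps({"title": title, "messages": messages}, indent=2, ensure_ascii=False)


chat = [
    {"role": "user", "content": "Summarize the release notes."},
    {"role": "assistant", "content": "Three bug fixes and one new flag."},
]

print(to_markdown("Release notes chat", chat))
print(to_json("Release notes chat", chat))
```

Markdown suits docs and notes, while the JSON form is easier to post-process with scripts.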

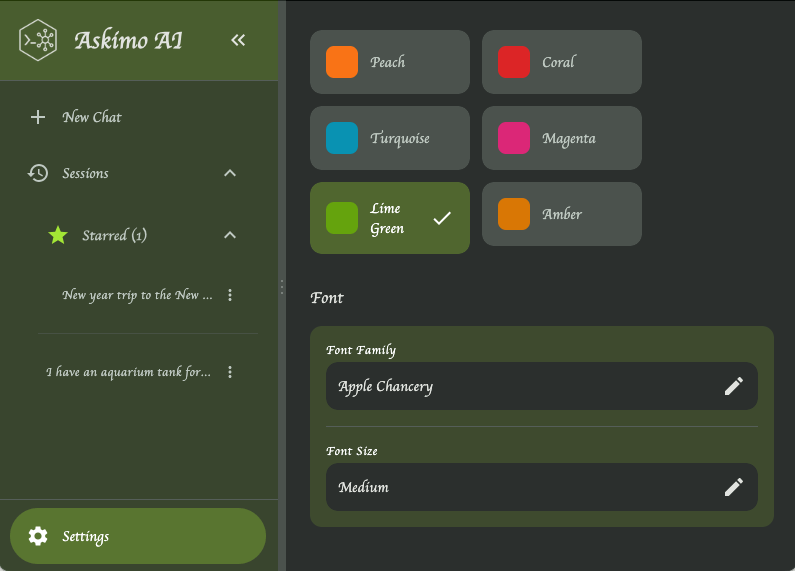

5. UI, Personalization & Accessibility for Ollama Desktop

- Light & dark themes (theme switching without reload)

- Font customization (readability tuning for long Ollama sessions)

- Keyboard shortcuts for: new chat, provider switch, search focus, export

- Smooth scroll and layout stability (no jumpiness during Ollama streaming)

6. Privacy & Local-First Workflow with Ollama

- Local model responses never leave your machine (when using local AI providers like Ollama)

- Cloud providers only when explicitly selected

- Export stays local unless you choose to share externally

- No silent background sync or analytics on content

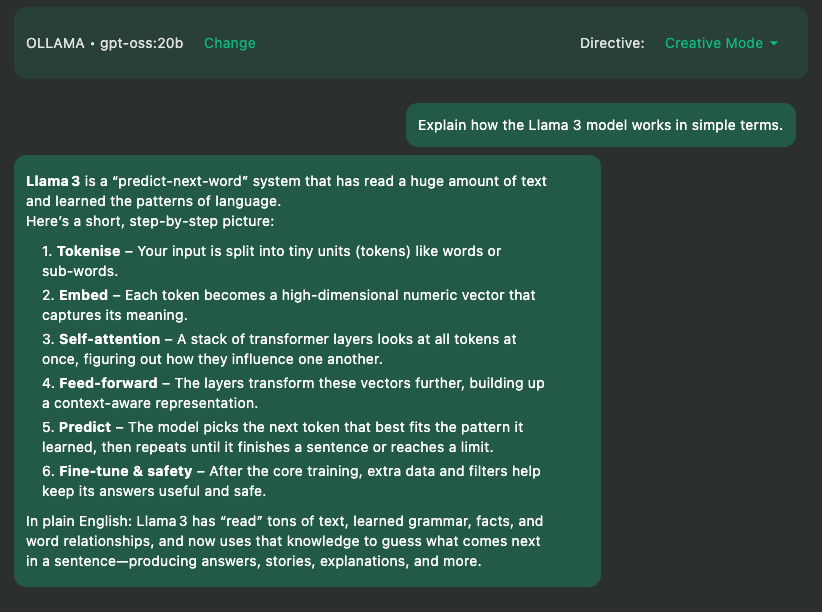

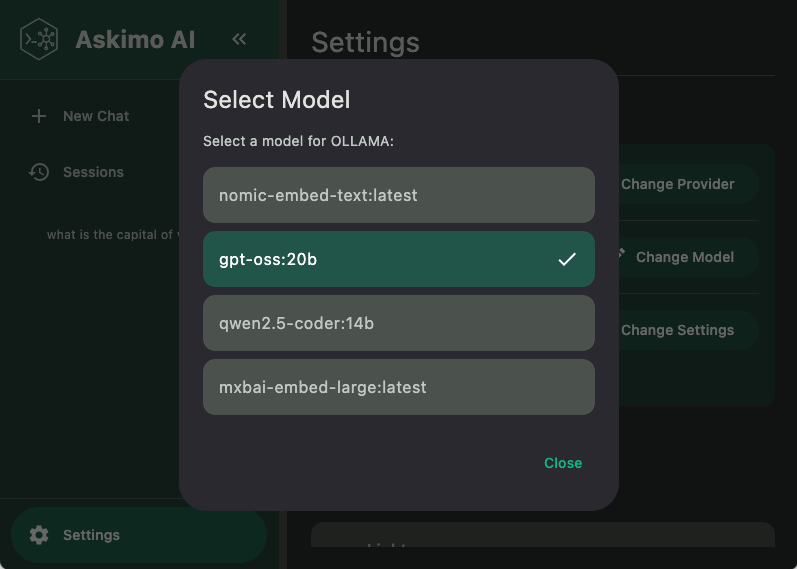

7. Custom Directives in Askimo for Ollama Models

Custom Directives let you define how the AI behaves when running local AI models. Instead of retyping long instructions every time you start a new chat, you set your preferences once and Askimo applies them automatically across all conversations.

- Consistent behavior for local models: Keep your Llama, Mistral, Gemma, or Phi-3 chats aligned with the tone, style, and level of detail you prefer.

- Task-specific presets for repeated workflows: Create directives for coding, debugging, summarizing papers, generating documentation, or anything else you routinely do with local AI models.

- Instant switching without prompt clutter: Change directives in one click instead of pasting paragraphs of instructions into every message.

- Optimized for long sessions with local inference: Directives help local models stay focused and reduce back-and-forth noise, making long research or coding sessions smoother and more efficient.
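One plausible way to apply a directive is to send it as a `system` message at the start of every request to Ollama's `/api/chat` route. The sketch below builds such a request body. The preset names, the `DIRECTIVES` dictionary, and the `build_chat_request` helper are hypothetical; how Askimo wires directives internally is not described in this guide.

```python
# Hypothetical presets; the names and wording are illustrative only.
DIRECTIVES = {
    "code-review": "You are a careful code reviewer. Be concise and cite line numbers.",
    "summarize": "Summarize in at most five bullet points.",
}


def build_chat_request(model, directive, history, user_text):
    """Build an /api/chat request body with the directive as a system message."""
    messages = []
    if directive.strip():
        messages.append({"role": "system", "content": directive.strip()})
    messages.extend(history)
    messages.append({"role": "user", "content": user_text})
    return {"model": model, "messages": messages, "stream": True}


request = build_chat_request(
    model="mistral",
    directive=DIRECTIVES["summarize"],
    history=[],
    user_text="Summarize the attached meeting notes.",
)
print(request["messages"][0]["role"])  # system
```

Because the directive lives outside the visible conversation, switching presets changes only the first message of the next request.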

8. Project-Aware RAG with Local Ollama Models

Askimo’s RAG (Retrieval-Augmented Generation) feature lets you chat with your entire project using local Ollama models. Instead of manually copying content into prompts, Askimo automatically retrieves relevant context from your project files. Read our complete guide to chatting with documents using Ollama RAG for a full walkthrough.

- Context-aware conversations with your projects: Ask questions about your work and get answers grounded in your actual files using Llama 3, Mistral, or other Ollama models. Works with code projects, documentation, research papers, writing projects, and more.

- Automatic context retrieval: Askimo indexes your project files and pulls relevant content into the conversation context automatically.

- Privacy-first local RAG: Your files never leave your machine when using local Ollama models with RAG, unlike cloud-based assistants.

- Multi-file understanding: Ask questions that span multiple files, and Ollama models will receive relevant context from across your entire project.
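The retrieve-then-prompt flow behind RAG can be sketched in a few lines. This toy version scores chunks by simple word overlap with the question, which is a deliberate simplification: real systems, presumably including Askimo, typically use embeddings or a proper index. All function names, the chunk size, and the sample files are assumptions for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; a stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def chunk(text, size=40):
    """Split text into pieces of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def top_chunks(question, documents, k=2):
    """Return the k (path, chunk) pairs sharing the most words with the question."""
    wanted = Counter(tokenize(question))
    scored = []
    for path, text in documents.items():
        for piece in chunk(text):
            overlap = sum((Counter(tokenize(piece)) & wanted).values())
            if overlap:
                scored.append((overlap, path, piece))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(path, piece) for _, path, piece in scored[:k]]


def build_prompt(question, hits):
    """Place the retrieved chunks ahead of the question."""
    context = "\n\n".join(f"[{path}]\n{piece}" for path, piece in hits)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"


docs = {
    "auth.py": "def login(user, password): validate the password and create a session token",
    "README.md": "Installation steps: download the installer and run it",
}
question = "How is the password validated in login?"
print(build_prompt(question, top_chunks(question, docs, k=1)))
```

The resulting prompt would then be sent to a local model, so neither the files nor the question leave the machine.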

Example use cases:

- Software projects: “Explain how the authentication flow works” or “Where is the user data validated?”

- Documentation: “Summarize the key changes in the API documentation” or “What’s the installation process?”

- Research papers: “What methodology did I use in chapter 3?” or “Find all references to climate data”

- Writing projects: “What themes appear across all chapters?” or “List all character interactions with John”

- Technical specs: “What are the system requirements?” or “How does module A connect to module B?”

Features Unique to Askimo (Compared to Other Ollama GUIs)

- Unified multi-model chat (local + hosted)

- Structured organization with search, favorites, and export options

- Native desktop experience with macOS and Windows installers

- Multiple export formats (Markdown, JSON, HTML) designed for developers and research workflows

- Project-aware RAG for conversations with your projects using local Ollama models (your files stay private) — learn how to set it up

- Seamless extensibility through a shared CLI and Desktop architecture

Other Ollama interfaces focus mainly on providing a chat window. Askimo is designed for long-term productivity, structured knowledge, and fast workflows across both local and cloud models.

Common Search Questions (FAQ)

Does Ollama have an official desktop GUI?

Ollama is built around a CLI and a local API; recent macOS and Windows releases also ship a simple chat window. Askimo App is a full-featured desktop client that connects to Ollama locally.

What is a good Ollama desktop app for macOS or Windows?

Askimo offers multi-model switching, search, starring, export, and a polished UX designed for everyday use on both macOS and Windows.

Can I use Ollama models and cloud models together?

Yes. Askimo lets you run local AI models (including Ollama), then switch to OpenAI, Claude, or Gemini with a single click.

Is my data private when using Askimo with Ollama?

Yes. All local inference happens through your Ollama installation. Askimo only communicates with your local endpoint when using Ollama. Learn more about how Askimo protects your data and doesn’t collect, exchange, or store sensitive information.

Why are responses slow with Ollama?

Large models (like bigger Llama 3 variants) require strong hardware. Choose smaller models such as mistral or phi3 for faster responses, or upgrade CPU/GPU.

How do I change Ollama models in Askimo?

Open Providers → Ollama, then update the model name. You can pre-download a model with:

ollama pull mistral

Can I run Askimo + Ollama offline?

Yes. After models are downloaded, both Askimo and Ollama work entirely offline.

Can I use Askimo with my projects using Ollama?

Yes. Askimo’s RAG feature lets you chat with your entire project using local Ollama models. Whether it’s code, documentation, research papers, or writing projects, your files are indexed locally and relevant context is automatically added to conversations, keeping everything private on your machine. See our full RAG guide for setup instructions and real-world examples.

Troubleshooting

Model does not respond

Check if Ollama service is running:

ollama list

If the command cannot connect, start the server with ollama serve. If the list is empty, run a model to download it:

ollama run mistral

Endpoint unreachable

Confirm port 11434 is active. If you customized the port, update Askimo’s provider settings.
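A quick way to confirm that something is listening on the port is a plain TCP connection attempt. The sketch below is a generic check under the assumption that Ollama uses its default port; the `port_is_open` helper is made up for this example and says nothing about which process owns the port.

```python
import socket


def port_is_open(host: str = "localhost", port: int = 11434, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    state = "open" if port_is_open() else "closed or unreachable"
    print(f"Port 11434 is {state}")
```

If you customized the port, pass it as the `port` argument and use the same value in Askimo's provider settings.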

Slow responses

Use a smaller model or close resource-heavy applications.
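To compare models objectively, you can compute generation speed from the statistics Ollama attaches to the final response object: `eval_count` (tokens generated) and `eval_duration` (nanoseconds spent generating). The `tokens_per_second` helper and the sample numbers below are illustrative only.

```python
from typing import Optional


def tokens_per_second(final_response: dict) -> Optional[float]:
    """Compute generation speed from eval_count and eval_duration (nanoseconds)."""
    count = final_response.get("eval_count")
    duration_ns = final_response.get("eval_duration")
    if not count or not duration_ns:
        return None  # statistics missing, e.g. not the final chunk
    return count / (duration_ns / 1e9)


# Made-up numbers: 120 tokens generated in 4 seconds.
sample = {"done": True, "eval_count": 120, "eval_duration": 4_000_000_000}
print(tokens_per_second(sample))  # 30.0
```

Running the same prompt against two models and comparing this figure gives a concrete basis for choosing the smaller one.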

Missing model error

Pull it explicitly:

ollama pull phi3

Askimo vs Other Ollama Desktop Apps & Ollama GUIs

When evaluating Ollama desktop clients and Ollama GUI options for macOS, Windows, or Linux, here’s how Askimo compares:

Askimo Ollama Desktop vs Open WebUI:

- Askimo: Native desktop app with optimized performance for Ollama chat

- Open WebUI: Browser-based Ollama interface, typically deployed with Docker

- Askimo advantage: Multi-provider support (Ollama + ChatGPT + Claude + Gemini) and project-aware RAG

Askimo vs Ollama Terminal CLI:

- Askimo: Full conversation history, search, export, RAG, and organization for Ollama chats

- CLI: Basic prompt/response with no persistence or Ollama chat management

- Askimo advantage: Professional Ollama workflow with keyboard shortcuts and themes

Askimo vs Generic Ollama Web UIs:

- Askimo: Lazy-loaded Ollama messages for smooth performance even with 1000+ message chats

- Web UIs: Full DOM rendering causes lag in long Ollama conversations

- Askimo advantage: Native desktop speed and resource efficiency for Ollama models

For users running Llama 3, Mistral, Phi-3, Gemma, or other Ollama models locally, Askimo offers a comprehensive Ollama desktop experience in 2025.

Final Thoughts

Askimo brings Ollama to the desktop with speed, structure, and zero friction. Local models stay private. Your conversations stay organized. And your prompts become reusable knowledge instead of throwaway commands.

Try Askimo today: 👉 https://askimo.chat/download/

Have feedback or feature requests? Star the repo and open an issue.